The hype train in artificial intelligence shows no signs of slowing down. Barely two weeks after GPT-5 was released, OpenAI CEO Sam Altman is already teasing the next big milestone: GPT-6. His comments come as rival labs and startups unveil new open-source models, Google expands AI into everyday apps and devices, and robotics companies show off humanoid machines that can learn and act with surprising autonomy.

The story of AI right now is one of rapid iteration, global competition, and expanding ambitions. Here’s a closer look at the latest developments shaping the sector.

GPT-6: The Next Frontier for Memory

At a private dinner with reporters in San Francisco, Altman explained that memory will be the central feature of GPT-6. While GPT-5 improved reasoning and efficiency, the next step is personalization.

“People want product features that require us to be able to understand them,” Altman said.

In practice, memory means the model will remember user preferences, communication styles, and previous interactions. Instead of prompting it repeatedly, users could expect the AI to anticipate needs, streamline workflows, and even adopt a shorthand unique to the relationship.

Industry analysts describe this as a “moat”—a protective barrier that keeps users loyal to one product. If an AI knows your goals and habits, switching to a competitor becomes less appealing.

But there’s a tradeoff. Critics warn that fully adaptive models risk creating echo chambers, similar to the way social media algorithms fed users reinforcing content. If an AI only mirrors user instructions—“be super woke” or “be extremely conservative”—it may amplify biases instead of challenging them. Balancing personalization with objectivity will be one of the most difficult challenges for frontier model labs.

China’s Counterplay: DeepSeek V3.1 and Qwen Image Edit

While OpenAI prepares GPT-6, Chinese companies are accelerating their own projects.

- DeepSeek V3.1: Released as an open-weights model, it is now downloadable on Hugging Face. Though it requires massive compute to run, developers expect quantized versions that will work on smaller machines soon. DeepSeek’s higher-end “R” series has reportedly been delayed, as Beijing pressures firms to shift away from Nvidia chips and rely on Chinese hardware instead.

- Qwen Image Edit: This new tool from Alibaba’s Qwen team is gaining attention for its precision in photo manipulation. Unlike many generative models that alter an entire image, Qwen can make tiny, targeted changes while keeping everything else intact. Demonstrations show it rotating objects to new perspectives, changing the color of a single letter in a block of text, swapping backgrounds, and even creating avatars in styles ranging from 3D cartoons to Studio Ghibli.

The consistency of results—such as rendering dozens of nearly identical penguins with only one element changed—has impressed researchers. It signals that China is not only catching up in text-based AI, but also building competitive tools in visual editing.

A Common Standard for AI Coding Agents

For developers, one of the headaches of AI coding has been fragmentation. Platforms such as Cursor, Claude Code, Windsurf, and Factory each required their own configuration files to tell the AI how to write, style, and manage code.

Now, major players have agreed to a solution: AGENTS.md.

This new open standard acts like a README file for AI coding agents. It gives models clear instructions on best practices, style guides, and preferences in one place. Already, OpenAI’s Codex, Amp, Google’s Jules, and several other platforms support the format.

For software teams working across multiple tools, this could significantly reduce friction and accelerate the rise of agent-driven development.
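To make the idea concrete, here is a minimal Python sketch of how a tool might locate the instructions file that applies to a given source file. It assumes the file is named AGENTS.md and that the copy closest to the file being edited takes precedence. The function name `find_agents_file` and the `repo_root` parameter are hypothetical, chosen for illustration, and do not come from any particular agent's implementation.

```python
from pathlib import Path
from typing import Optional


def find_agents_file(start: Path, repo_root: Path) -> Optional[Path]:
    """Return the closest AGENTS.md at or above `start`, or None.

    Hypothetical helper: walks upward from the directory containing `start`
    and stops once `repo_root` (or the filesystem root) has been checked.
    """
    start = start.resolve()
    repo_root = repo_root.resolve()
    # If `start` is a file (or does not exist yet), begin in its parent directory.
    current = start if start.is_dir() else start.parent
    while True:
        candidate = current / "AGENTS.md"
        if candidate.is_file():
            return candidate
        # Stop after checking the repository root or the filesystem root.
        if current == repo_root or current == current.parent:
            return None
        current = current.parent
```

Under these assumptions, a monorepo could keep general guidance in a top-level AGENTS.md and override it per package, and a tool would call `find_agents_file(Path("pkg/src/main.py"), Path("."))` before editing that file.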

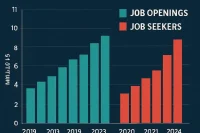

Google’s Opal and Gemini for the Home

Google is also pushing forward with consumer-facing AI. The company’s new product Opal allows users to build disposable mini apps using only a prompt. Opal generates a node-based workflow that can transcribe YouTube videos, generate quizzes, summarize content, or run custom scripts. Apps can be remixed and shared, making it easy for non-developers to build simple tools.

Meanwhile, Google confirmed that Gemini will power the voice assistant on its home devices, bringing more conversational and context-aware help to households. This is part of Google’s strategy to embed AI into hardware, from smartphones to smart speakers, to compete with Apple and Amazon.

Perplexity’s “Super Memory”

Search-and-chat startup Perplexity AI is working on its own version of advanced memory. CEO Aravind Srinivas revealed that the company is in final testing for “super memory,” which early users say performs better than existing memory features in competing products.

The system recalls user details across sessions—like coursework topics or professional goals—and integrates them into future conversations. In an increasingly crowded AI market, this kind of personalization could help Perplexity stand out.
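As a rough illustration of cross-session recall in general, and not a description of how Perplexity or any other vendor implements it, the Python sketch below stores a few user facts in a JSON file and prepends them to the next prompt. The class name `SessionMemory`, its methods, and the prompt wording are all hypothetical.

```python
import json
from pathlib import Path
from typing import Dict


class SessionMemory:
    """Toy cross-session memory: facts persist in a JSON file between runs."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.facts: Dict[str, str] = {}
        # Reload anything saved by an earlier session.
        if path.exists():
            self.facts = json.loads(path.read_text(encoding="utf-8"))

    def remember(self, key: str, value: str) -> None:
        """Store one fact and write the whole store back to disk."""
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts, indent=2), encoding="utf-8")

    def build_prompt(self, user_message: str) -> str:
        """Prepend remembered facts so a model could condition on them."""
        if not self.facts:
            return user_message
        lines = [f"- {key}: {value}" for key, value in sorted(self.facts.items())]
        return "Known about the user:\n" + "\n".join(lines) + "\n\nUser: " + user_message
```

A production system would presumably add retrieval, ranking, and user controls for viewing or deleting what is stored; the sketch only shows the basic loop of saving details in one session and reusing them in the next.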

GPT-5 Solving New Math

Even as GPT-6 is teased, GPT-5 itself is making headlines. Researcher Sébastien Bubeck reported that GPT-5 Pro solved an open problem in convex optimization from a published paper. The model not only provided a valid proof, but also improved on the paper's result.

If confirmed broadly, this marks one of the first times an AI model has produced genuinely new mathematics. It suggests that frontier models may soon assist researchers in generating discoveries rather than just summarizing known knowledge.

Robots Take a Leap: Boston Dynamics and Figure 02

AI isn’t just about text and images. Robotics companies are showing how machine learning can drive autonomous physical tasks.

- Boston Dynamics’ Atlas: In a new demo, the humanoid robot opened boxes, dealt with human interference, and placed items into bins—all smoothly and at full speed. The system used “language-conditioned manipulation,” meaning it executed multi-step tasks based on simple verbal instructions.

- Figure AI’s Figure 02: In outdoor tests, the robot navigated uneven terrain, corrected itself when stuck, and continued walking through obstacles. Though less fluid than Atlas, it showed resilience in uncontrolled environments.

Both rely on learned rather than hand-coded control. Robots are typically trained first on tele-operated demonstrations, a form of imitation learning, and then refined through reinforcement learning and large-scale model training. Industry analysts say the progress suggests humanoid robots could begin real-world deployments in logistics and manufacturing within the next decade.

Cursor’s Sonic Model

Coding platform Cursor quietly rolled out a new stealth model called Sonic. While details remain scarce, some speculate it may be linked to Elon Musk’s upcoming Grok Code project. For now, developers can test the model directly within Cursor.

OpenAI Eyes Infrastructure

Bloomberg reported that OpenAI may eventually explore selling cloud infrastructure similar to AWS, Google Cloud, or Azure. While the company is currently constrained by compute shortages, executives acknowledged that offering infrastructure could be a future revenue stream once capacity expands.

Meta Restructures AI Divisions Again

Meta is undergoing its fourth major AI reorganization in six months, signaling ongoing tension between research ambitions and product needs.

Alexandr Wang, the former Scale AI CEO who now leads Meta’s AI efforts, outlined the changes:

- FAIR, Meta’s research arm, will feed directly into Meta Superintelligence Labs.

- GitHub’s former CEO Nat Friedman will integrate AI into consumer products.

- A new infrastructure team will be led by longtime VP Aparna Ramani.

- The recently formed AGI Foundations team has already been dissolved.

For Meta, the constant churn highlights both the difficulty of competing with OpenAI and Google and the urgency of delivering useful AI across its massive user base.

Nvidia’s New Chip for China

Finally, Nvidia is adapting to U.S. export restrictions with a new chip for China. Tentatively called the B30A, it will outperform the limited H20 chip currently allowed but still remain weaker than the flagship B300 used elsewhere.

The B30A uses a single-die design and offers about half the raw computing power of the B300. While watered down, it could help Nvidia maintain its presence in China’s enormous AI market, which is under intense pressure to reduce reliance on U.S. hardware.

What It All Means

From frontier labs in San Francisco to robotics floors in Boston to AI startups in Beijing, the pace of innovation is relentless. A few key themes stand out:

- Memory is the moat. Every major AI company—from OpenAI to Perplexity to Google—is racing to build memory features that keep users loyal.

- Robotics is converging with AI. Humanoid machines like Atlas and Figure 02 are showing how language-conditioned learning can cross from software to hardware.

- Geopolitics shapes the race. Nvidia’s restricted chips, China’s push for domestic hardware, and U.S. investment in AI infrastructure all underscore the global stakes.

The road ahead is uncertain, but one thing is clear: the AI hype train is only picking up speed.

FAQs

1. What is GPT-6 expected to deliver?

Sam Altman has said memory will be its defining feature, enabling the model to recall user preferences and adapt interactions over time.

2. Why is memory such a big deal in AI?

Personalized memory makes AI tools more useful and sticky. If a system remembers your style and goals, it saves time and makes switching less appealing.

3. What is Qwen Image Edit and why is it notable?

It’s a Chinese open-source image editor that can make very precise changes—like altering one letter in a block of text—while leaving everything else untouched.

4. How close are humanoid robots to real use?

Robots like Atlas and Figure 02 are still in testing, but analysts say logistics and manufacturing could see real deployments within the next decade.