OpenAI Nvidia circular deals — the $1 trillion AI funding model

OpenAI Nvidia circular deals are a new approach to AI infrastructure funding, part of a buildout that could reach $1 trillion in total investment. Unlike Microsoft or Google, OpenAI does not have a balance sheet large enough to fund the infrastructure it needs. Its solution is a set of circular funding partnerships: a self-reinforcing investment cycle in which AI companies fund each other’s growth while building the infrastructure they all need.

Understanding circular funding in AI

The traditional model breakdown

Historically, OpenAI relied on Microsoft for all of the data center capacity behind its compute needs and enterprise business. As demand grows rapidly, however, OpenAI is planning three years ahead and anticipates needing two to three times its current capacity.

The new circular approach

OpenAI’s circular funding strategy involves multiple partnerships, including a five-year, $300 billion deal with Oracle and a partnership with Nvidia to build 10 gigawatts of data center capacity. The circular nature comes from Nvidia investing $10 billion, and potentially up to $100 billion, in OpenAI’s infrastructure, which in turn drives demand for more Nvidia chips.

The $400 billion data center math

Infrastructure cost breakdown

Each gigawatt of data center capacity requires approximately $40 billion in investment. For OpenAI’s planned 10 gigawatts, the total cost reaches $400 billion. If Nvidia invests the full $100 billion, its contribution covers one quarter of the total, leaving OpenAI to raise the remaining $300 billion through private vehicles or other funding instruments.
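The arithmetic above can be checked with a short Python sketch. The figures are the article’s estimates; the function name `funding_gap` and the constant names are hypothetical, chosen only for illustration, and the $100 billion is treated as the upper bound of Nvidia’s investment.

```python
# All figures are the article's estimates, in billions of US dollars.
COST_PER_GW_USD_BN = 40    # approximate investment per gigawatt
PLANNED_GW = 10            # OpenAI's planned capacity with Nvidia
NVIDIA_MAX_USD_BN = 100    # upper bound of Nvidia's investment


def funding_gap(cost_per_gw: float, gigawatts: float, partner_contribution: float) -> dict:
    """Return total cost, partner share, and the amount left to raise."""
    total = cost_per_gw * gigawatts
    return {
        "total": total,
        "partner_share": partner_contribution / total,
        "remaining": total - partner_contribution,
    }


result = funding_gap(COST_PER_GW_USD_BN, PLANNED_GW, NVIDIA_MAX_USD_BN)
print(f"Total: ${result['total']:.0f}B")               # Total: $400B
print(f"Nvidia share: {result['partner_share']:.0%}")  # Nvidia share: 25%
print(f"Left to raise: ${result['remaining']:.0f}B")   # Left to raise: $300B
```

Passing 10 instead of 100 as the partner contribution shows the other end of the range: with only the first $10 billion committed, $390 billion would still need to be raised.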

Why hyperscalers aren’t enough

Traditional hyperscalers like Microsoft, Google, and Amazon can’t provide the scale OpenAI needs. The circular funding model allows AI companies to build their own data centers rather than relying on existing cloud providers, creating more control and potentially better economics.

CapEx explosion in AI infrastructure

From $35B to $100B+ annually

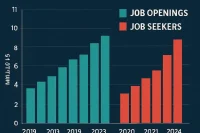

Traditional tech companies used to spend $30-35 billion annually on CapEx, representing 5-10% of revenue. AI has changed this completely. Current CapEx is already double that amount, and next year it is expected to cross $100 billion, roughly three times what existing data center operators traditionally spent.
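As a quick sanity check on the “three times” figure, the sketch below works through the multiples in Python. It uses only the numbers quoted above; the variable names are hypothetical.

```python
# Figures from the article, in billions of US dollars per year.
TRADITIONAL_CAPEX_USD_BN = (30, 35)  # historical annual range
NEXT_YEAR_CAPEX_USD_BN = 100         # level the article expects to be crossed

# "Double that amount" applied to both ends of the historical range.
current_low, current_high = (2 * x for x in TRADITIONAL_CAPEX_USD_BN)

# How many times larger $100B is than each end of the historical range.
multiples = [NEXT_YEAR_CAPEX_USD_BN / x for x in TRADITIONAL_CAPEX_USD_BN]

print(f"Current CapEx: ${current_low}-{current_high}B")
print(f"Next-year multiple: {min(multiples):.2f}x to {max(multiples):.2f}x")
```

The multiple lands between about 2.9x and 3.3x depending on which end of the historical range is used, which is consistent with the rounded “three times” claim.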

Compute cost explosion

The fundamental driver is compute cost. Traditional search queries cost approximately $0.02 per query, while generative AI chatbot queries cost nearly 10 times more. Google’s token usage has grown 50x, creating unprecedented demand for compute infrastructure.
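To see what a tenfold per-query cost means at scale, here is a minimal Python sketch. The per-query cost and the multiplier come from the article; the daily query volume is a made-up assumption for illustration only, and “nearly 10 times” is treated as a flat 10x upper bound.

```python
SEARCH_COST_USD = 0.02   # article's approximate cost per traditional search query
GENAI_MULTIPLIER = 10    # article says "nearly 10 times"; used here as an upper bound
HYPOTHETICAL_DAILY_QUERIES = 1_000_000_000  # assumption, not a figure from the article


def daily_cost(cost_per_query: float, queries_per_day: int) -> float:
    """Total daily serving cost in US dollars."""
    return cost_per_query * queries_per_day


genai_cost = SEARCH_COST_USD * GENAI_MULTIPLIER
search_daily = daily_cost(SEARCH_COST_USD, HYPOTHETICAL_DAILY_QUERIES)
genai_daily = daily_cost(genai_cost, HYPOTHETICAL_DAILY_QUERIES)

print(f"Generative AI cost per query: about ${genai_cost:.2f}")
print(f"Search, daily: ${search_daily / 1e6:,.0f}M")
print(f"Generative AI, daily: ${genai_daily / 1e6:,.0f}M")
```

Under these assumptions the same query volume costs roughly $20 million a day as search and roughly $200 million a day as generative AI, which is the gap driving the demand for compute.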

Private capital fills the gap

Hyperscaler limitations

Even companies like Google, which generates $100 billion in operating cash flow, now face spending more than their entire cash flow on AI infrastructure. This creates opportunities for private capital to enter the space through attractive rental and return models.

Infrastructure as an asset class

Private investors see AI infrastructure as an attractive asset because it can be rented out and generate returns over three to four years. This has spawned the entire “neocloud” space, where companies rent compute capacity from specialized providers.
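A rough Python sketch of what that return window implies is below. It assumes, purely for illustration, that “returns over three to four years” means recouping the upfront investment in that time, and it reuses this article’s figure of roughly $40 billion per gigawatt. Operating costs, financing costs, and depreciation are ignored, and the function name is hypothetical.

```python
COST_PER_GW_USD_BN = 40   # article's approximate investment per gigawatt
PAYBACK_YEARS = (3, 4)    # article's return window, read here as a payback period


def required_annual_net_rent(cost_usd_bn: float, years: int) -> float:
    """Net rental income per year (USD billions) needed to recoup the cost."""
    return cost_usd_bn / years


for years in PAYBACK_YEARS:
    rent = required_annual_net_rent(COST_PER_GW_USD_BN, years)
    print(f"{years}-year payback: about ${rent:.1f}B per gigawatt per year")
```

On these simplified assumptions, each gigawatt would need to earn roughly $10 billion to $13 billion a year in net rental income to pay for itself within the window.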

Market dynamics and bubble concerns

Demand still outstrips supply

Despite massive investments, demand continues to far outstrip supply. Nvidia’s earnings calls show their Hopper chip prices increasing due to overwhelming demand, indicating the market hasn’t reached saturation. This suggests current investment levels are justified by real demand rather than speculation.

Capital allocation efficiency

While some capital misallocation is inevitable in any rapidly growing sector, the current demand-supply gap makes it difficult to question the pace of AI infrastructure buildout. The market is still in the phase where investment is necessary to meet user demand.

Earnings season implications

Magnificent Seven performance patterns

Year-to-date performance shows a clear pattern: companies spending heavily on AI CapEx (Nvidia, Google) have outperformed conservative spenders (Apple, Amazon). The market is rewarding AI investment over margin optimization, suggesting this trend will continue through earnings season.

Revenue growth vs. margins

Companies showing higher revenue growth driven by AI components are seeing multiple expansion, while those focused solely on margins are experiencing multiple contraction. This creates scope for positive surprises in companies that can demonstrate AI-driven acceleration.

Concentration risk and market structure

The picks and shovels evolution

We’ve moved beyond the initial “picks and shovels” phase where infrastructure providers benefited most. Now, the focus shifts to how software companies adapt to using AI models as distribution layers and intelligence components in their tech stacks.

Market concentration concerns

While concentration risk exists with a small number of companies driving most S&P 500 gains, this reflects the reality that AI infrastructure requires massive scale and capital. The companies best positioned to provide this infrastructure naturally become dominant players.

Investment implications

Valuation considerations

While certain pockets of the market may be overvalued, individual companies in the Magnificent Seven represent wonderful businesses investing in AI with high usage potential. The key question isn’t current valuations but how they’ll monetize AI over the long term.

Rerating opportunities

Companies that successfully integrate AI as an intelligence layer and continue showing positive surprises will likely see multiple rerating, creating opportunities for investors who can identify the right adaptation strategies.

FAQs

Q: What are OpenAI Nvidia circular deals and why do they matter?

A: Circular deals involve AI companies funding each other’s infrastructure growth. Nvidia is investing up to $100B in OpenAI’s data centers, and that buildout in turn drives demand for more Nvidia chips.

Q: How much will OpenAI Nvidia circular deals cost in total?

A: The 10-gigawatt data center plan requires about $400B in total investment, with Nvidia contributing up to $100B and OpenAI raising the remaining $300B through private funding.

Q: Why do OpenAI Nvidia circular deals signal a $1 trillion market?

A: These deals represent a new funding model where AI companies invest in each other’s infrastructure, creating a self-reinforcing cycle that could reach $1 trillion in total AI infrastructure investment.

Q: Are OpenAI Nvidia circular deals sustainable long-term?

A: Yes, because they’re driven by real demand (Google’s token usage up 50x) and create infrastructure that can be monetized through rental models over 3-4 years.

Live example — user point of view

As an AI infrastructure investor, I’ve been tracking these circular funding deals as the new normal. The traditional model of relying on hyperscalers is breaking down because the scale requirements are too massive. OpenAI’s approach of partnering with Nvidia to build their own infrastructure while creating demand for Nvidia’s chips is brilliant—it’s a win-win that could become the template for the entire industry.