Comprehensive AI Chatbot Comparison Reveals Which Assistant Truly Delivers Value

The AI assistant landscape has become increasingly crowded, with major tech companies competing for your attention and subscription dollars. With ChatGPT, Google Gemini, Perplexity, and Grok all promising to revolutionize how you interact with technology, which one actually delivers the best performance? This definitive AI chatbot comparison puts these four leading assistants through rigorous testing across 25+ real-world scenarios to determine which one deserves your monthly subscription, which runs around $20 for most of them.

The AI Chatbot Comparison Methodology: Testing What Actually Matters

To ensure this AI chatbot comparison provides actionable insights, each assistant was evaluated on identical tasks across multiple categories that represent how people actually use these tools in daily life.

The Contenders

The AI chatbot comparison includes the four most prominent consumer-facing AI assistants:

– **ChatGPT (OpenAI)**: The pioneer that sparked the consumer AI revolution

– **Google Gemini**: The search giant’s answer to ChatGPT

– **Perplexity**: The newcomer focused on accurate, cited information

– **Grok (xAI)**: Elon Musk’s entry, trained on X/Twitter data and marketed as more “unfiltered”

Scoring System

Each AI chatbot was awarded points based on accuracy, usefulness, and quality of response across multiple categories. The maximum possible score was 35 points, with results tabulated to determine an overall winner.

Problem-Solving: The Foundation of AI Chatbot Comparison

The first test in our AI chatbot comparison evaluated each assistant’s ability to solve practical problems with logical reasoning.

Spatial Reasoning Test

When asked how many 29-inch Aerolite hard-shell suitcases would fit in a 2017 Honda Civic trunk:

– ChatGPT suggested theoretically three, but practically two

– Google Gemini provided similar nuanced reasoning

– Perplexity incorrectly claimed three or four would fit

– Grok confidently and correctly stated two would fit

This initial test revealed Grok’s surprising strength in giving direct, practical answers without unnecessary elaboration.

Image Recognition and Common Sense

For the ingredient identification test, each AI was shown a photo containing four normal baking ingredients plus one jar of dried porcini mushrooms:

– Only Grok correctly identified the mushrooms and advised against adding them to a cake

– The other three misidentified the mushrooms as various ingredients

– ChatGPT thought they were mixed spice

– Gemini believed they were crispy fried onions

– Perplexity mistook them for instant coffee

This highlighted a critical weakness in visual recognition in three of the four assistants in our AI chatbot comparison.

Mathematical and Logical Reasoning in AI Chatbot Comparison

Next, each assistant was tested on mathematical calculations and financial planning.

Scientific Calculations

When asked to calculate pi times the speed of light in km/h:

– All four provided the correct answer of approximately 3.39 billion km/h

– Minor differences appeared only in rounding
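The arithmetic behind this answer is easy to check yourself. The short Python sketch below (our own illustration, not how any of the chatbots compute it) converts the SI-defined speed of light, 299,792,458 m/s, to km/h and multiplies the result by π. It lands at roughly 3.39 billion km/h, matching what all four assistants reported.

```python
import math

# Speed of light in vacuum: exactly 299,792,458 m/s by SI definition
SPEED_OF_LIGHT_M_PER_S = 299_792_458

# Convert m/s to km/h: divide by 1,000 (m -> km), multiply by 3,600 (s -> h)
c_km_per_h = SPEED_OF_LIGHT_M_PER_S / 1_000 * 3_600

# The quantity the chatbots were asked for
pi_times_c = math.pi * c_km_per_h

print(f"c      = {c_km_per_h:,.1f} km/h")
print(f"pi * c = {pi_times_c:,.0f} km/h (~{pi_times_c / 1e9:.2f} billion km/h)")
```

Any small differences between the assistants' answers come down to how many digits of π and c they kept before rounding.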

Financial Planning

For a savings calculation (how many weeks it would take to afford a Nintendo Switch 2 by saving $42 per week):

– All correctly identified the Switch 2’s $449 price point

– All calculated that 11 weeks of saving would be required ($449 ÷ $42 ≈ 10.7, rounded up to a whole week)

– All provided clear, straightforward answers
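The only subtle step in this savings question is rounding up. After 10 weeks you have $420, which is not enough, so an eleventh week is needed. A minimal Python sketch of the calculation, using the $449 price and $42 weekly amount from the test:

```python
import math

PRICE_USD = 449          # Switch 2 price used in the test
WEEKLY_SAVINGS_USD = 42  # amount set aside each week

# A partial week still has to be waited out in full, so round up
weeks_needed = math.ceil(PRICE_USD / WEEKLY_SAVINGS_USD)

print(f"{PRICE_USD} / {WEEKLY_SAVINGS_USD} = {PRICE_USD / WEEKLY_SAVINGS_USD:.2f} -> {weeks_needed} weeks")
# prints "449 / 42 = 10.69 -> 11 weeks"
```

Using `math.ceil` rather than ordinary rounding matters here. Rounding 10.69 to the nearest integer happens to give 11 as well, but a price of $430 (10.24 weeks) would round down to 10 and leave you short.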

This section of our AI chatbot comparison showed relative parity in basic computational tasks.

Language Processing in Our AI Chatbot Comparison

Language translation represents one of the most complex tasks for AI systems, requiring deep understanding of context and cultural nuances.

Basic Translation

When given a simple Rick Astley lyric to translate:

– All four correctly identified and translated the phrase

– Google Gemini provided the most concise, direct translation

Advanced Translation with Homonyms

When challenged with the sentence “I was banking on being able to bank at the bank before visiting the riverbank” for Spanish translation:

– ChatGPT and Perplexity handled the multiple meanings expertly

– Gemini performed adequately

– Grok translated too literally, missing the contextual meanings

This section of our AI chatbot comparison revealed significant differences in language processing capabilities.


Product Research Capabilities in AI Chatbot Comparison

One of the most practical applications for AI assistants is helping users research products before making purchases.

Basic Product Recommendations

When asked for earbud recommendations:

– ChatGPT, Perplexity, and Grok correctly suggested the Sony WF-1000XM5

– Google Gemini recommended a non-existent product (Sony WF-1000XM6)

Specific Product Requirements

When a color requirement was added (red earbuds):

– Only Grok successfully recommended three actually red earbuds

– Others either recommended products not available in red or completely misunderstood the query

Price-Constrained Recommendations

When adding a $100 price limit to red earbuds with ANC:

– ChatGPT correctly recommended the Beats Studio Buds

– Gemini and Grok made some good suggestions but included inaccuracies

– Perplexity completely fabricated pricing information

This section of our AI chatbot comparison exposed a critical weakness across all platforms: none could reliably provide accurate product information, with Perplexity even fabricating prices.

Critical Thinking in AI Chatbot Comparison

The ability to avoid logical fallacies and recognize statistical concepts is crucial for trustworthy AI.

Correlation vs. Causation

When shown a chart correlating cereal consumption with subscriber growth:

– ChatGPT partially fell for the correlation trap, suggesting a potential link

– Google Gemini and Perplexity correctly identified spurious correlation

– Grok incorrectly suggested increasing cereal consumption to maximize subscriber growth

Survivorship Bias

When presented with the classic WWII aircraft armor problem (where to reinforce planes based on returning aircraft damage patterns):

– All four correctly identified survivorship bias

– All recommended reinforcing areas showing no damage (engines, cockpit)

– All provided clear explanations of the concept
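The reasoning the assistants gave can be reproduced with a small simulation. In the Python sketch below, the loss probabilities are invented for illustration and are not historical figures. Hits land uniformly across four sections, but hits to the engine or cockpit are far more likely to bring a plane down. When we count damage only on planes that made it back, engine and cockpit hits look rare. That is not because those areas are seldom hit. Planes hit there mostly never returned.

```python
import random
from collections import Counter

random.seed(1943)

# Hypothetical probability that a single hit in this section downs the plane
LOSS_PROB = {"engine": 0.60, "cockpit": 0.50, "fuselage": 0.05, "wings": 0.05}
SECTIONS = list(LOSS_PROB)

all_hits = Counter()       # every hit, including on lost planes (unobservable in reality)
returned_hits = Counter()  # hits visible on planes that made it back

for _ in range(10_000):
    hits = [random.choice(SECTIONS) for _ in range(3)]  # 3 hits, uniform across sections
    all_hits.update(hits)
    # The plane returns only if it survives every hit it took
    survived = all(random.random() >= LOSS_PROB[s] for s in hits)
    if survived:
        returned_hits.update(hits)

for section in SECTIONS:
    print(f"{section:9s} all planes: {all_hits[section]:5d}   "
          f"returned planes: {returned_hits[section]:5d}")
```

In the "all planes" column the four sections are hit about equally often. In the "returned planes" column the engine and cockpit are badly under-represented, which is why the sparsely damaged areas are the ones that need armor.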

This mixed performance in our AI chatbot comparison shows that critical thinking remains challenging for AI systems.

Content Generation in AI Chatbot Comparison

Creating useful content is one of the most popular use cases for AI assistants.

Email Writing

When asked to write an apologetic email about playing Elden Ring all weekend:

– All four produced effective, empathetic emails

– ChatGPT’s response was particularly well-crafted

– All balanced admitting fault with suggestions to make amends

Travel Planning

For a Tokyo food itinerary:

– ChatGPT provided the most organized, practical plan with meals for each day

– Gemini included good recommendations but with impractical timing

– Perplexity offered a list rather than a proper itinerary

– Grok created a sensible, well-organized plan

Creative Ideation

When asked for YouTube video ideas:

– Google Gemini provided the most platform-relevant suggestions

– Grok offered the most clickable, feasible concepts

– ChatGPT’s ideas were solid but less innovative

– Perplexity completely missed the mark

Visual and Video Generation in AI Chatbot Comparison

The frontier of AI capabilities is rapidly expanding into multimedia generation.

Image Generation

When asked to create a YouTube thumbnail about cheese:

– Results varied widely in quality and relevance

– None produced truly professional-quality results

– All struggled with specific modification requests

Video Generation

For video generation capabilities:

– Only ChatGPT and Google Gemini offer this feature

– Google’s Veo produced remarkably superior results

– ChatGPT’s Sora created unsettling, silent footage

– The quality gap between the two was substantial

This section of our AI chatbot comparison revealed that video generation quality varies dramatically between platforms.

Fact-Checking and Reliability in AI Chatbot Comparison

The ability to distinguish fact from fiction is perhaps the most crucial capability for AI assistants.

Rumor Detection

When presented with false information about the Nintendo Switch 2 selling poorly:

– ChatGPT, Google Gemini, and Grok firmly corrected the misinformation

– Perplexity was less confident but still factual

Source Verification

When asked to fact-check a fake article about a Samsung Tesla phone:

– All four correctly identified the article as false

– Gemini and Grok even traced the rumor to its source

– All provided clear explanations of why the information was incorrect

Integration and Ecosystem in AI Chatbot Comparison

The utility of AI assistants extends beyond their core capabilities to how well they connect with other services.

Platform Integration

– Google Gemini excels with Google Workspace integration

– ChatGPT offers strong integration with Dropbox, GitHub, and custom GPTs

– Grok provides real-time access to X/Twitter content

– Perplexity has limited integration capabilities

Memory and Context

When testing long-term memory of previous conversations:

– None performed particularly well

– All struggled to recall specific details from earlier interactions

– ChatGPT and Grok were most transparent about their limitations

User Experience Factors in AI Chatbot Comparison

Beyond raw capabilities, the experience of using these AI assistants varies significantly.

Response Speed

– Grok consistently delivered the fastest responses

– ChatGPT was a close second

– Perplexity was noticeably slower

– Google Gemini was the slowest by a significant margin

Source Citation

– Perplexity excelled at providing clear, consistent sources

– Other platforms rarely cited sources or did so inconsistently

– Perplexity’s citations included specific web pages and even Reddit threads

Voice Interaction

– ChatGPT and Google Gemini offered the most natural voice interactions

– Both sounded remarkably human and were easy to interrupt

– Grok’s voice quality was acceptable but not as refined

– Perplexity struggled with voice recognition and natural conversation flow

Final AI Chatbot Comparison Results and Recommendations

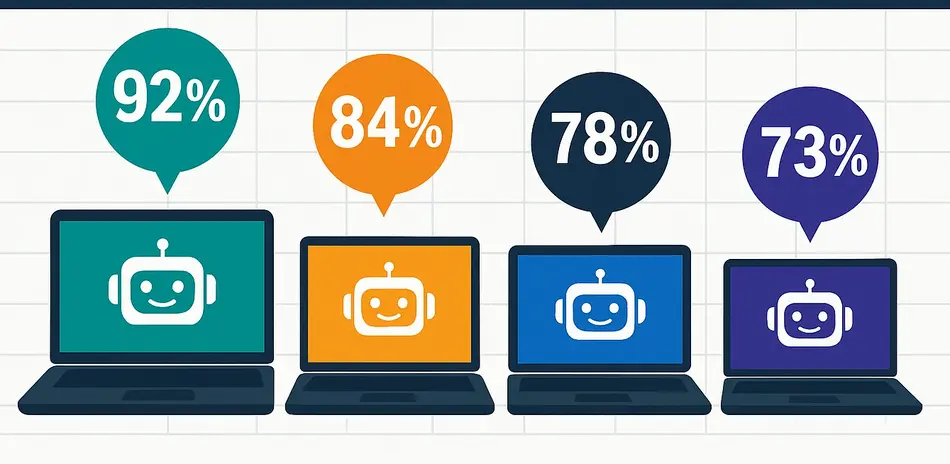

After extensive testing across all categories in our AI chatbot comparison, the final scores revealed:

1. **ChatGPT: 29 points** – The most well-rounded and consistent performer

2. **Grok: 24 points** – Surprisingly strong, particularly in speed and direct answers

3. **Google Gemini: 22 points** – Strong in some areas but hampered by slow responses

4. **Perplexity: 19 points** – Excellent at citations but inconsistent overall

Value Proposition

Since every platform except Grok ($30/month) costs approximately $20/month, ChatGPT emerges as the clear value leader in our AI chatbot comparison, offering the best overall performance at a competitive price point.

FAQ: AI Chatbot Comparison Insights

What makes ChatGPT stand out in this AI chatbot comparison?

ChatGPT emerged as the winner in our comprehensive AI chatbot comparison due to its consistent performance across nearly all test categories. While other assistants might excel in specific areas (like Perplexity with citations or Grok with speed), ChatGPT delivered reliable, high-quality responses in almost every scenario. Its strengths include well-crafted writing, logical problem-solving, and a balance of creativity with factual accuracy. The AI chatbot comparison also showed that its voice interaction was among the most natural tested (matched only by Gemini), and that it offers strong integration capabilities through its GPT store. This consistent performance across diverse use cases makes it the most versatile option for most users.

How does Grok’s performance in this AI chatbot comparison compare to expectations?

Grok’s second-place finish in our AI chatbot comparison was perhaps the biggest surprise in our testing. Despite being the newest contender and having the highest subscription cost ($30/month vs $20/month for others), Grok demonstrated remarkable strengths in several key areas. The AI chatbot comparison showed Grok excelling in response speed (consistently the fastest), direct practical answers without unnecessary elaboration, and surprisingly good visual recognition. Its training on X/Twitter data appears to have given it an edge in understanding internet culture and providing concise responses. However, the AI chatbot comparison also revealed weaknesses in critical thinking and more complex language tasks, suggesting it still has room for improvement.

Based on this AI chatbot comparison, what are the key limitations of current AI assistants?

Our AI chatbot comparison revealed several consistent limitations across all platforms. Product research emerged as a particularly problematic area: between them, the assistants recommended a product that doesn't exist (Gemini), suggested earbuds not available in the requested color, and even fabricated pricing information (Perplexity). The AI chatbot comparison also showed that long-term memory remains limited, with none of the assistants reliably recalling specific details from earlier interactions. Critical thinking varied widely, with ChatGPT partially and Grok fully falling for a correlation/causation trap. Additionally, the AI chatbot comparison demonstrated that while image generation is improving, it remains inconsistent, and video generation is still in its infancy, with only two platforms offering the feature and quality varying dramatically.

How does Google Gemini’s integration with Google services impact its ranking in this AI chatbot comparison?

While Google Gemini’s deep integration with Google Workspace earned it extra points in our AI chatbot comparison, these advantages weren’t enough to overcome its fundamental limitations. The AI chatbot comparison revealed Gemini as the slowest assistant by a significant margin, which creates friction in real-world usage. Additionally, despite its integration advantages, Gemini showed inconsistent performance in product recommendations (even recommending non-existent products) and produced excessively verbose responses in research tasks. The AI chatbot comparison suggests that while Gemini’s Google ecosystem integration is valuable for users heavily invested in Google services, its core AI performance still needs improvement to compete with ChatGPT and even Grok.

What does this AI chatbot comparison reveal about the future development of AI assistants?

This comprehensive AI chatbot comparison suggests several key trends for the future of AI assistants. First, speed is becoming increasingly important, with Grok’s quick responses earning it significant points despite other limitations. Second, the AI chatbot comparison shows that reliable factual information remains challenging, with even citation-focused Perplexity occasionally providing incorrect information. Third, multimedia generation represents a major frontier, with the dramatic quality difference between video generation tools highlighting rapid development. Perhaps most importantly, the AI chatbot comparison reveals that no single assistant excels at everything, suggesting that the next breakthrough may come from assistants that can recognize their limitations and seamlessly delegate tasks to specialized models rather than attempting to be all-in-one solutions.

The AI chatbot landscape continues to evolve rapidly, with each platform showing distinct strengths and weaknesses. While ChatGPT currently leads our AI chatbot comparison, the competition is fierce, with Grok’s surprising performance suggesting that newer entrants can quickly gain ground. For most users seeking a reliable, versatile AI assistant, ChatGPT remains the recommended choice based on our extensive testing, offering the best balance of capabilities at a competitive price point.

As these platforms continue to develop, we can expect to see improvements in their weakest areas, potentially shifting the competitive landscape. The most successful platforms will likely be those that address the core limitations identified in our AI chatbot comparison: product research accuracy, long-term memory, and consistent critical thinking.