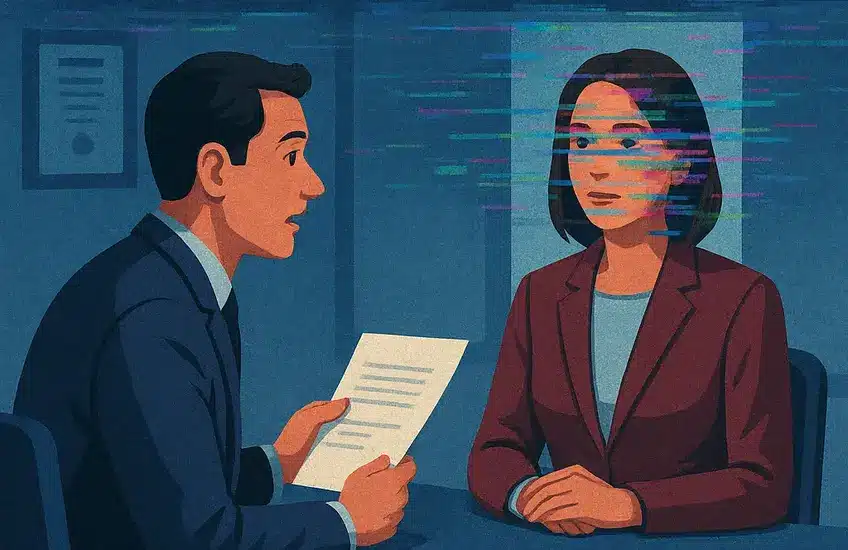

Deepfake job candidates are rapidly infiltrating the hiring process, and Gartner predicts that by 2028 one in four job applicants worldwide will be fake. The trend threatens corporate security, financial stability, and even national security, as increasingly sophisticated AI tools make it harder to distinguish genuine applicants from fraudulent impostors.

The Growing Threat of Deepfake Job Candidates

The hiring landscape has dramatically transformed since the pandemic, with remote work becoming a permanent fixture in many industries. While this shift has expanded talent pools globally, it has also created a dangerous vulnerability that bad actors are eagerly exploiting. Research shows that currently, 16.8% of job applicants are fake – meaning approximately one out of every six candidates applying to your company is misrepresenting their identity, qualifications, or location.
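For readers who want to check the conversion from a percentage to a "one in N" figure, here is a minimal Python sketch. The function name `one_in_n` is made up for illustration and does not come from the research quoted above.

```python
def one_in_n(rate: float) -> float:
    """Convert a rate such as 0.168 into the N of a 'one in N' figure."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    return 1 / rate


fake_share = 0.168  # 16.8% of applicants, as quoted above
n = one_in_n(fake_share)
print(f"About one in {n:.1f} applicants")  # rounds to one in six
```

The exact value is slightly under six, so "one in six" is a fair rounding of the 16.8% figure.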

“Deepfake candidates are infiltrating the job market at a crazy, unprecedented rate,” warn cybersecurity experts tracking this phenomenon. “They are real people seeking jobs, but they take on a different persona when they come on screen, and that is enabled by deepfake technology.”

How Deepfake Technology Works in Job Applications

Creating a deepfake for job interviews has become alarmingly simple. All that’s required is a static image and a few seconds of audio to generate convincing video manipulation that can fool even experienced hiring managers. These technologies allow fraudulent applicants to:

- Present as someone from a different geographical location

- Assume stolen identities of qualified professionals

- Hide their true nationality, especially if from sanctioned countries

- Bypass visa requirements and work authorization checks

Real-World Examples of Deepfake Job Scams

The threat isn’t theoretical – companies are already falling victim to these sophisticated scams. One particularly revealing case involved a hiring manager at Vidoc Security Lab who became suspicious during a video interview:

“Something was really off. I wanted to have proof that he was a real person or not,” the hiring manager recounted. “The first thing that came to my mind was, ‘Let’s put your hand in front of your face,’ because I knew AI tools that change your face like Snapchat filters won’t work when you partially occlude the face.”

When the candidate refused this simple request, it confirmed the interviewer’s suspicions that they were dealing with a deepfake applicant.

The North Korean Connection

Perhaps most concerning is the state-sponsored element of some deepfake job scams. In May 2024, the Justice Department revealed that over 300 U.S. companies had unknowingly hired imposters with ties to North Korea for remote IT roles, resulting in at least $6.8 million being funneled to the North Korean regime.

Cybersecurity company KnowBe4 shared its own experience with a North Korean IT worker who used a stolen U.S. identity:

“He had a really good résumé. We also asked him to submit credentials so that we could go through a background verification. The initial phone interviews went really well,” a representative explained. However, within minutes of the new “employee” turning on their company laptop, IT security detected a password-stealing trojan being installed.

The Financial and Security Implications

The consequences of hiring deepfake candidates extend far beyond wasted recruitment resources. These security breaches can:

- Provide unauthorized access to sensitive company data

- Enable installation of malicious code or backdoors into corporate systems

- Result in direct financial fraud through payroll theft

- Fund illicit activities in sanctioned nations

- Damage algorithmic systems through adversarial AI attacks

Financial experts project that AI-generated fraud, including deepfake tactics, could cost the U.S. financial sector up to $40 billion by 2027, a dramatic increase from $12.3 billion in 2023.
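To put that jump in perspective, the sketch below works out the yearly growth rate implied by the two figures. It assumes smooth compound growth between 2023 and 2027, which is a simplification for illustration and not something the projection itself states. The function name is hypothetical.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


# Figures quoted above, in billions of U.S. dollars.
growth = implied_annual_growth(start=12.3, end=40.0, years=2027 - 2023)
print(f"Implied growth: {growth:.1%} per year")
```

Under that assumption, losses would need to grow by roughly a third every year to reach the projected total.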

Detecting Deepfake Applicants

As deepfake technology becomes more sophisticated, detection methods must evolve accordingly. Voice authentication startup Pindrop Security has been at the forefront of identifying these fraudulent candidates, including one they nicknamed “Ivan X” who claimed to be from the U.S. but was actually located in Russia near the North Korean border.

According to Pindrop’s research, of all candidates they evaluate, 1 in 343 is linked to North Korea, and among those, 1 in 4 used deepfake technology during live interviews.
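The two ratios can be multiplied to estimate how many of the evaluated candidates were both linked to North Korea and using a deepfake. This is a back-of-the-envelope sketch using only the numbers quoted above. The variable names are illustrative.

```python
from fractions import Fraction

# Ratios quoted above from Pindrop's research.
linked_to_north_korea = Fraction(1, 343)
used_deepfake_given_linked = Fraction(1, 4)

# Share of all evaluated candidates who were both linked and used a deepfake.
both = linked_to_north_korea * used_deepfake_given_linked
print(f"One in {both.denominator} candidates")
```

Using `Fraction` keeps the result exact, so the combined figure comes out as a clean "one in N" instead of a long decimal.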

Impact on the Hiring Landscape

The proliferation of deepfake job candidates threatens to fundamentally alter hiring practices, potentially reversing many of the gains made in remote work flexibility.

“If this trend continues and if we will experience more and more fake candidates, every company that is hiring remotely will need to adjust their hiring processes, and probably they will have to switch to offline interviews as if it was before Covid,” suggests one industry expert.

Potential Consequences for Legitimate Job Seekers

The rise in deepfake applications creates significant challenges for genuine job seekers as well:

- Longer, more complex verification processes

- Increased suspicion from employers toward remote candidates

- Potential bias against international applicants

- Higher likelihood of false rejections due to heightened security measures

- Fewer remote opportunities as companies revert to in-person interviews

“The whole reason you need to worry about deepfake job seekers is, at the very least, they’re making it harder for real employees and potential candidates to get jobs easily,” notes one employment analyst. “It can create all kinds of disruption, making the hiring process longer and more expensive.”

Protecting Your Organization from Deepfake Candidates

With the threat of deepfake job candidates continuing to grow, organizations must implement robust verification protocols to protect themselves. Here are essential strategies for safeguarding your hiring process:

- Implement multi-factor identity verification that goes beyond standard video interviews

- Develop unexpected interview techniques that can catch deepfakes off-guard, such as requesting specific actions during video calls

- Conduct thorough background checks with direct verification from previous employers

- Use specialized AI detection software designed to identify deepfake technology

- Consider hybrid interview approaches that combine remote and in-person components for final candidates

For more detailed guidance on cybersecurity best practices, visit the Cybersecurity and Infrastructure Security Agency (CISA) website.

Red Flags to Watch For

Hiring managers should remain vigilant for these warning signs that might indicate a deepfake candidate:

- Inconsistent lighting or strange visual artifacts around the face during video calls

- Audio that doesn’t perfectly sync with lip movements

- Reluctance to perform simple actions like turning the head at certain angles

- Excessive focus on remaining remote without valid reasons

- Inconsistencies between interview performance and resume qualifications

The Future of Secure Hiring in the Age of Deepfakes

As AI technology continues to advance, the battle between deepfake creators and detection methods will intensify. Organizations must stay informed about emerging threats while balancing security concerns with fair hiring practices.

“We might also see, unfortunately, biases in hiring because, for the worry of hiring a fake candidate, we might actually see a preference for hiring locally and therefore having all the interviews in person,” cautions one expert.

The challenge moving forward will be maintaining the benefits of global talent access while implementing sufficient safeguards against increasingly sophisticated deepfake technologies.

Collaborative Solutions

Addressing this growing threat will require cooperation between:

- Technology companies developing better detection tools

- Government agencies establishing regulatory frameworks

- Industry associations sharing best practices and threat intelligence

- Educational institutions raising awareness among future hiring professionals

Hiring? Protect Your Company from Deepfake Candidates

Post jobs securely with WhatJobs and access our advanced verification tools to ensure you’re connecting with genuine candidates. Our platform includes built-in safeguards against fraudulent applications.

FAQ: Deepfake Job Candidates

What exactly are deepfake job candidates and how do they operate?

Deepfake job candidates use artificial intelligence technology to alter their appearance and voice during video interviews, allowing them to impersonate others or conceal their true identity. They typically apply for remote positions where they can avoid in-person verification, using stolen credentials and sophisticated AI tools to create convincing digital personas during the hiring process.

How common are deepfake job candidates in today’s hiring landscape?

Research indicates that approximately 16.8% of job applicants are fake, meaning one out of every six candidates may be misrepresenting themselves. Gartner predicts this problem will grow significantly, with projections suggesting that by 2028, one in four job candidates worldwide will be fake.

What are the security risks associated with hiring deepfake job candidates?

Hiring deepfake job candidates creates several serious security vulnerabilities, including unauthorized access to sensitive company data, installation of malicious code or backdoors into corporate systems, direct financial fraud through payroll theft, funding of illicit activities in sanctioned nations, and potential damage to company algorithms through adversarial AI attacks.

How can companies detect and prevent deepfake job candidates during the hiring process?

Companies can protect themselves from deepfake job candidates by implementing multi-factor identity verification, developing unexpected interview techniques (like asking candidates to perform specific actions that typically confuse deepfake technology), conducting thorough background checks with direct verification, using specialized AI detection software, and considering hybrid interview approaches that combine remote and in-person components.
